What does BigO Notation entail? For Beginners: A Simplified Introduction

In programming, there can be a thousand and one ways to solve a problem.

Let's imagine you want to know if a number is even or odd. To check for even, you could look at whether its last digit is 0, 2, 4, 6, or 8, or test whether it is divisible by two without a remainder. There may be other options too.
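As a rough sketch, here are two ways to write that check in Python. The function names are made up for illustration, and the last-digit version assumes the number is a whole number written in base 10.

```python
def is_even_by_remainder(number):
    """Even if dividing by two leaves no remainder."""
    return number % 2 == 0


def is_even_by_last_digit(number):
    """Even if the last decimal digit is 0, 2, 4, 6, or 8."""
    last_digit = str(abs(number))[-1]
    return last_digit in "02468"


for n in (7, 10, 42, 135):
    # Both approaches give the same answer for every number.
    print(n, is_even_by_remainder(n), is_even_by_last_digit(n))
```

Both functions solve the same problem, which is exactly the situation where you need a way to compare solutions.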

But in programming, how do you know which solution is best, and how can you compare the efficiency of one solution to another? That's where knowledge of BigO comes in handy. BigO is a crucial concept in computer science because it lets you analyze and compare algorithms for efficiency and scalability. It's commonly labeled a difficult topic, especially for people without a background in computer science, like myself.

In this article, I share my most fundamental grasp of the subject. So, if this is your first time learning about BigO, grab a cup of coffee and relax while we learn together as we sail this "BigO" ship.

What is BigO?

BigO notation is a mathematical notation that describes how the time an algorithm takes to run grows as the size of its input grows (time complexity), and how the memory it uses grows (space complexity).

In simpler terms, it tells you how quickly an algorithm's work grows as its input gets bigger.

It's pronounced "big O of" followed by whatever is inside the parentheses. O(n), for example, is read "big O of n."

BigO explanation using a search analogy

Suppose you want to look up the word "omega" in a dictionary. You could start from the beginning, moving from A to B, C, and so on until you reach O (let's call this approach A). However, you're more likely to skip that and open the dictionary near the center (let's call this approach B). Because approach A makes you go through every letter until you reach "O," it will take longer than approach B.

Let's say we wish to do a similar search on a much larger collection, such as a massive database like Facebook's. Consider the following scenario: you log on to Facebook and it asks you to enter your username. To verify your username, it must search through its database. Let's say your username is "verifiedvee"; it could start searching from the A's, but it's better to start from the center. It's important to notice that both approaches A and B are viable options here.

However, after examining both algorithms (approaches A and B), we may conclude that approach B solves the search problem in fewer steps. In BigO terms, we can say that in the worst case, approach A (simple or linear search) will take n steps, while approach B (binary search) will take about log₂ n steps, where n is the number of usernames. Approach B only works because the usernames, like the words in a dictionary, are kept in sorted order.

Recall that logarithms are the inverse of exponentials. So, log₂(x) is the number of 2s you multiply together to get x. For example, log₂(8) = 3 because 2 × 2 × 2 = 8.

BigO centers on the worst-case scenario of an algorithm.

BigO notation is used to denote an upper bound on an algorithm's run time, commonly described as the worst-case scenario. What do you believe the worst-case situation would be, using the search analogy we used earlier? Let's try to picture it together. What happens if the username isn't found in the database? How long would the algorithm take to run?

Using approach A, the program will search through all of the usernames in the database.

Using approach B, the program starts in the middle and discards half of the remaining usernames at every step, so it only ever checks a small fraction of them.

As a result, even under the worst-case situation, approach B performs fewer operations than approach A.
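To put rough numbers on that, the sketch below compares worst-case step counts for a database of n sorted usernames. It assumes the standard results that linear search checks all n entries when the username is missing, and that binary search checks at most floor(log₂ n) + 1 entries. The function names are illustrative.

```python
import math


def worst_case_linear_steps(n):
    """Approach A: every one of the n usernames is checked."""
    return n


def worst_case_binary_steps(n):
    """Approach B: the remaining range is halved until nothing is left."""
    return math.floor(math.log2(n)) + 1


for n in (10, 1000, 1_000_000):
    print(n, worst_case_linear_steps(n), worst_case_binary_steps(n))
# 10 10 4
# 1000 1000 10
# 1000000 1000000 20
```

Going from a thousand usernames to a million adds 999,000 steps to approach A but only 10 steps to approach B.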

Other notations that aren't covered in this article include Big Omega, written Ω(n), and Big Theta, written Θ(n). They are often introduced as the best-case and average-case scenarios, although strictly speaking Big Omega denotes a lower bound and Big Theta a tight bound on an algorithm's growth.

BigO does not measure an algorithm's speed in seconds.

BigO is a way to estimate how efficient an algorithm is. It is important to note, however, that BigO does not measure speed in seconds. Timing an algorithm with a clock is an unreliable way to compare it with another, because the measured time varies with the machine, the programming language, the operating system, and so on. What we really want to know is how the running time scales as the input size grows. So instead of measuring elapsed time, BigO lets you compare how the number of operations grows. It's written as, for example, O(n), where n is the size of the input and the expression inside the parentheses describes how the number of operations grows with n.
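A small experiment can make this concrete. The sketch below, which uses a hypothetical helper called sum_with_count, runs the same work three times and reports both the number of operations and the elapsed seconds. The operation count is identical on every run and every machine, while the seconds will typically differ from run to run.

```python
import time


def sum_with_count(values):
    """Add up the values and count how many additions were performed."""
    total = 0
    operations = 0
    for value in values:
        total += value
        operations += 1
    return total, operations


data = list(range(100_000))
for run in range(3):
    start = time.perf_counter()
    _, operations = sum_with_count(data)
    elapsed = time.perf_counter() - start
    # The operation count never changes; the elapsed time usually does.
    print(f"run {run}: {operations} operations, {elapsed:.6f} seconds")
```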

What is Time and Space Complexity?

We've already established that there can be multiple solutions to a problem. We examine the time and space complexity of each one because we want to choose the best.

The time complexity of an algorithm is a measure of how long it takes to run in relation to its input length/data size. This is the focus of the article.

The space complexity of an algorithm, on the other hand, is a measure of how much memory or space an algorithm uses as it runs in relation to its input length/data size.
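As an illustration of space complexity, the two hypothetical functions below both loop over every input number, so their time complexity is the same. The difference is in how much extra memory they need: the first keeps a single running total, while the second builds a new list with one entry per input.

```python
def sum_of_squares(numbers):
    """O(1) extra space: only one running total is stored."""
    total = 0
    for number in numbers:
        total += number * number
    return total


def list_of_squares(numbers):
    """O(n) extra space: the new list grows with the input."""
    squares = []
    for number in numbers:
        squares.append(number * number)
    return squares


print(sum_of_squares([1, 2, 3]))   # 14
print(list_of_squares([1, 2, 3]))  # [1, 4, 9]
```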

How do we use BigO Notation to classify Algorithm Runtime?

Let's look at different algorithm runtime classifications and how they're represented using Big O.

Constant Time Complexity (O(1))

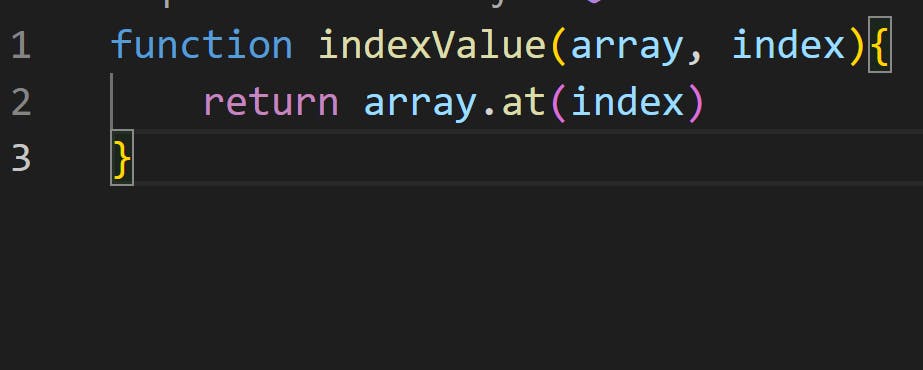

When an algorithm's run time remains constant regardless of the input size, we say it has O(1) complexity. A basic example is returning the value stored at a given index of an array.

Only one operation is performed in the code example above. This function will only return the value at that position, regardless of the length of the array we pass it. O(1) is the best time complexity an algorithm can have.
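A minimal Python sketch of constant-time indexing is shown here. The helper name get_value_at is made up for illustration and is not the code from the image above.

```python
def get_value_at(values, index):
    """Return the value at the given index: one operation, whatever the length."""
    return values[index]


small = [10, 20, 30]
large = list(range(1_000_000))

print(get_value_at(small, 1))  # 20
print(get_value_at(large, 1))  # 1
```

The second list is far longer than the first, yet the lookup still takes a single step.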

Linear Time Complexity (O(n))

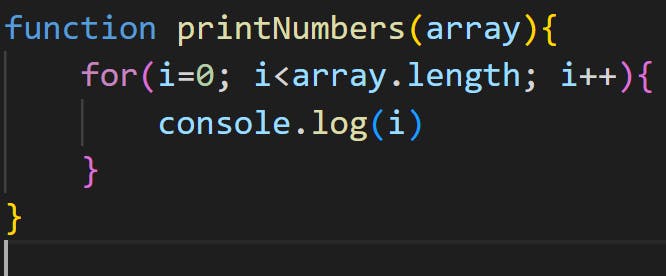

The runtime and input size are linearly related in an algorithm with O(n) complexity. As a result, the runtime increases in direct proportion to the input size. An example of this would be to print all values in an array.

In the example above, the body of the for loop runs once for each element in the array. Give it an array with twice as many elements and it does twice as much work. The algorithm's runtime is therefore proportional to the array length, giving it a linear time complexity.
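Here is a comparable sketch in Python, using a hypothetical print_all function that also returns how many values it printed so the growth is easy to see.

```python
def print_all(values):
    """Print every value once and return how many prints were made."""
    prints = 0
    for value in values:
        print(value)
        prints += 1
    return prints


print_all(["a", "b", "c"])             # 3 prints
print_all(["a", "b", "c", "d", "e"])   # 5 prints
```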

Logarithmic Time Complexity (O(log n))

With this complexity the runtime also grows with the input size, but far more slowly than with linear time. An example is binary search on a sorted array. Binary search works by comparing the target with the middle item, discarding the half of the list that cannot contain it, and repeating on the remaining half. Because each step halves the remaining items, the runtime is drastically lower than with linear time complexity.
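One common way to write binary search is sketched below. It assumes the list is already sorted in ascending order and returns -1 when the target is missing, which is a convention chosen here for illustration.

```python
def binary_search(sorted_values, target):
    """Return the index of target in sorted_values, or -1 if it is absent."""
    low, high = 0, len(sorted_values) - 1
    while low <= high:
        middle = (low + high) // 2
        if sorted_values[middle] == target:
            return middle
        if sorted_values[middle] < target:
            low = middle + 1   # target can only be in the right half
        else:
            high = middle - 1  # target can only be in the left half
    return -1


numbers = [1, 3, 5, 7, 9, 11, 13]
print(binary_search(numbers, 9))  # 4
print(binary_search(numbers, 4))  # -1
```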

Quadratic Time Complexity (O(n^2))

An algorithm with quadratic time complexity grows at a rate of n^2. This means that if the input size is 2, the number of operations is 2^2, which is 4, and if the input size is 10, it is 10^2, which is 100. This complexity is common with nested loops over the same data.

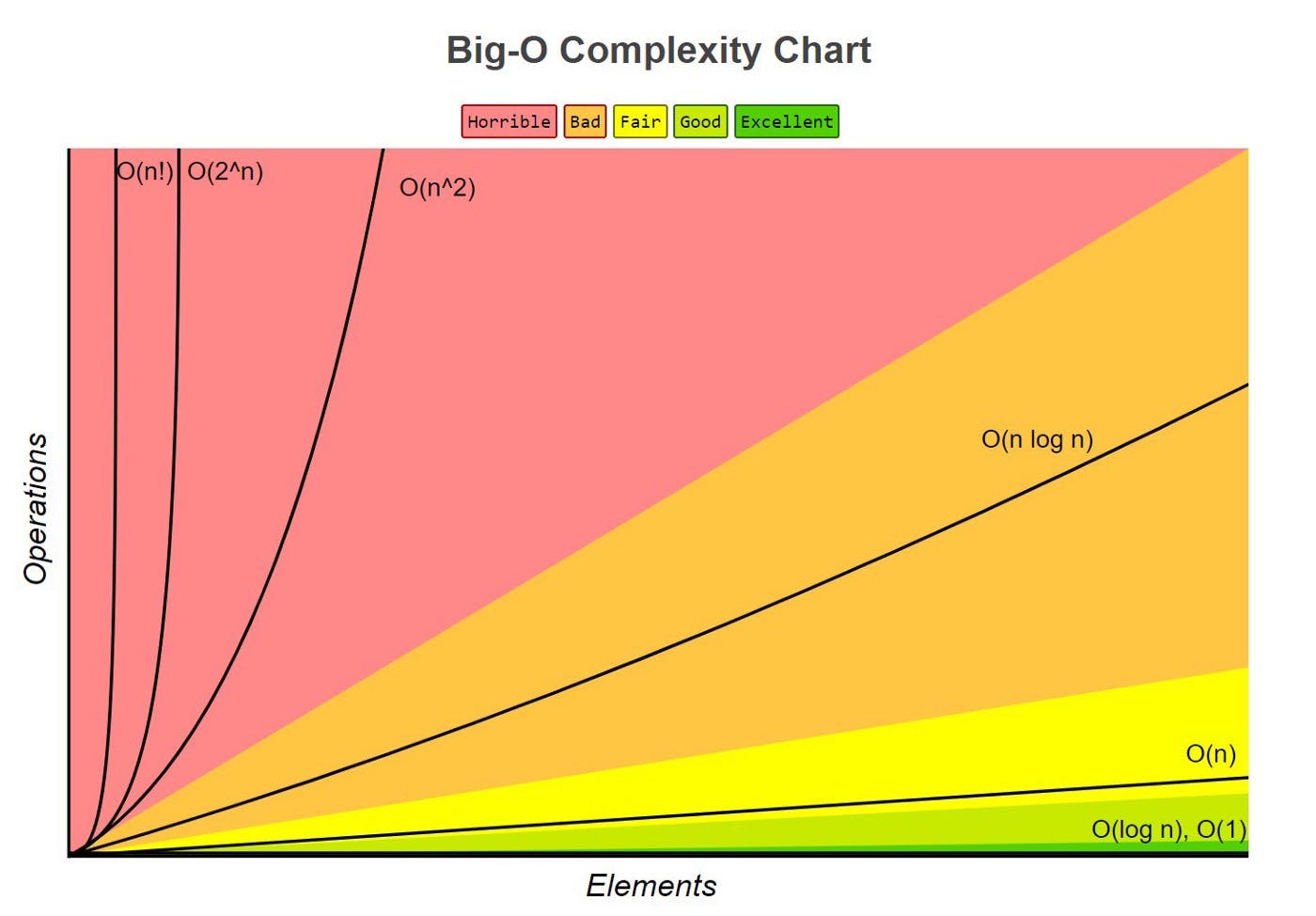
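A typical source of quadratic growth is a loop inside another loop over the same data. The sketch below uses a hypothetical all_pairs function that builds every possible pair of values.

```python
def all_pairs(values):
    """Pair every value with every value: n * n pairs for n values."""
    pairs = []
    for first in values:
        for second in values:
            pairs.append((first, second))
    return pairs


print(len(all_pairs([1, 2])))     # 4
print(len(all_pairs([1, 2, 3])))  # 9
```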

Other time complexity classes include exponential time complexity, factorial time complexity, and so on. The graph below shows how the various runtime complexities compare.

Image source: bigocheatsheet.com


You can also find some common data structures and algorithms complexity classification here.

Conclusion

This article does not cover everything there is to know about BigO notation. And while it may be tough to picture at first, knowing why and how it works makes it easier to comprehend. Knowing the time and space complexity of an algorithm also helps you write more efficient and scalable code. As a result, you become a better programmer.

Do you agree with this point? Please let me know in the comments.